This year, we are migrating from a pure-Python, thread-based system to ROS 2 (Robot Operating System 2) on both Barco Polo and our new boat, Crusader, applying lessons learned from RoboBoat 2025 and RoboSub 2025. ROS 2 lets the team use vendor-provided packages for sensor communication and get standardized data formats out of the box, significantly reducing the time required to develop low-level software. The migration also gives the team access to a wide range of mature, open-source tools in the ROS 2 ecosystem, such as LiDAR clustering packages and visualization tools like Foxglove.

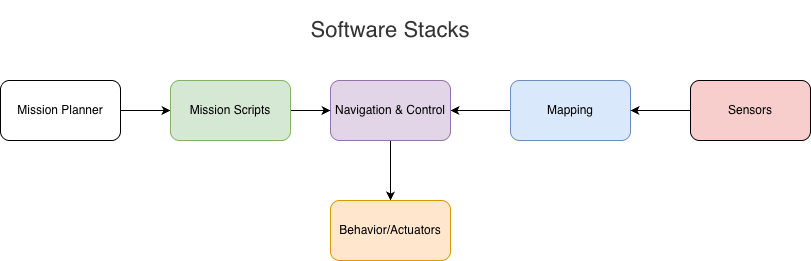

Our software architecture is divided into four main stacks: the API stack, Perception stack, Navigation stack, and Mission Planner stack. Each stack can be developed and tested independently thanks to ROS 2’s distributed architecture and its strong support for simulation and testing tools.

Overview

High-level software execution stack of our ASVs

API Stack

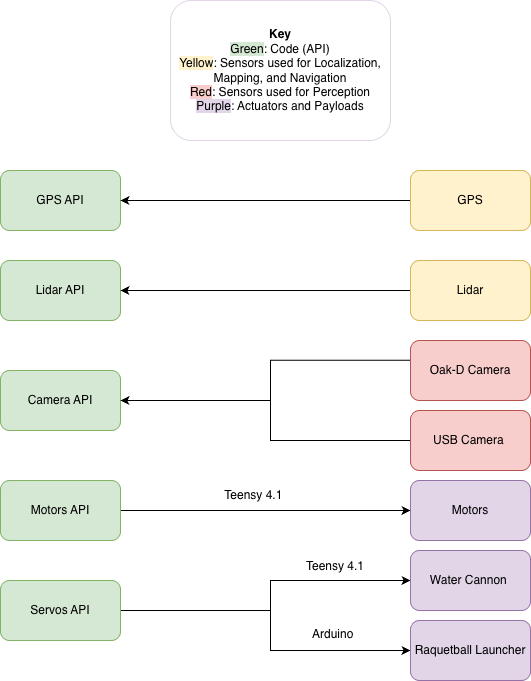

API Stack Architecture

The team created an API (Application Programming Interface) stack for the actuators and sensors that do not have a community ROS driver. For example, we wrote APIs to read from our UM982 GPS module and to communicate with our water pump, microcontroller, and custom racquetball launcher.

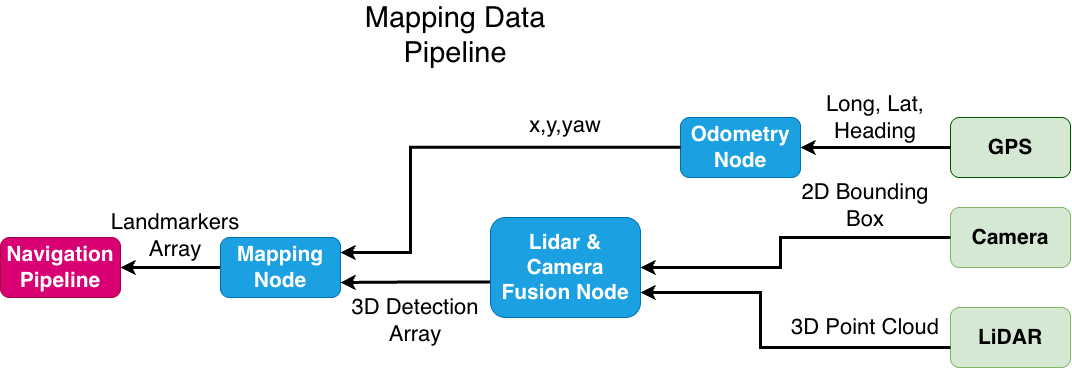

Perception & Mapping

The Perception stack enables the robot to see the world and understand its surroundings. Because RoboBoat 2026 places heavy emphasis on mapping, we combined a Livox MID-360 LiDAR with a DepthAI OAK-D LR stereo camera to help Barco Polo detect and report the location of mission elements. The team deployed YOLOv8 (You Only Look Once), a machine learning (ML) object detection model, on the camera to detect objects and produce an initial estimate of each target’s position. That estimate is then fused with LiDAR data to give a more accurate position.

To track targets while Barco Polo traverses the course, we implemented a Kalman filter that updates each target’s position estimate as Barco Polo continuously perceives its surroundings.
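
As a rough illustration of the idea, here is a minimal sketch of a Kalman filter for a single stationary target, such as a buoy, in a fixed map frame. It is not our production tracker: the class name `TargetTrack`, the stationary-target motion model, the independent x/y axes, and the variance values are all assumptions chosen to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class TargetTrack:
    """Kalman filter for one stationary target in the map frame.

    The state is the 2D position (x, y). The two axes are filtered
    independently with a shared variance, so no matrices are needed.
    """
    x: float
    y: float
    var: float = 4.0           # initial position variance in m^2 (assumed)
    process_var: float = 0.01  # variance added per step in m^2 (assumed)

    def predict(self) -> None:
        # Stationary model: the position stays put, uncertainty grows.
        self.var += self.process_var

    def update(self, zx: float, zy: float, meas_var: float) -> None:
        # Kalman gain: weight given to the new measurement (0..1).
        k = self.var / (self.var + meas_var)
        self.x += k * (zx - self.x)
        self.y += k * (zy - self.y)
        self.var *= 1.0 - k


# Hypothetical usage: the first detection seeds the track,
# and later detections refine it.
track = TargetTrack(x=10.0, y=5.0)
for zx, zy in [(11.8, 6.1), (12.1, 5.9), (12.0, 6.0)]:
    track.predict()
    track.update(zx, zy, meas_var=1.0)
```

Each update pulls the estimate toward the measurement by an amount set by the gain, so a noisy detection (large `meas_var`) moves the estimate less than a precise one.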

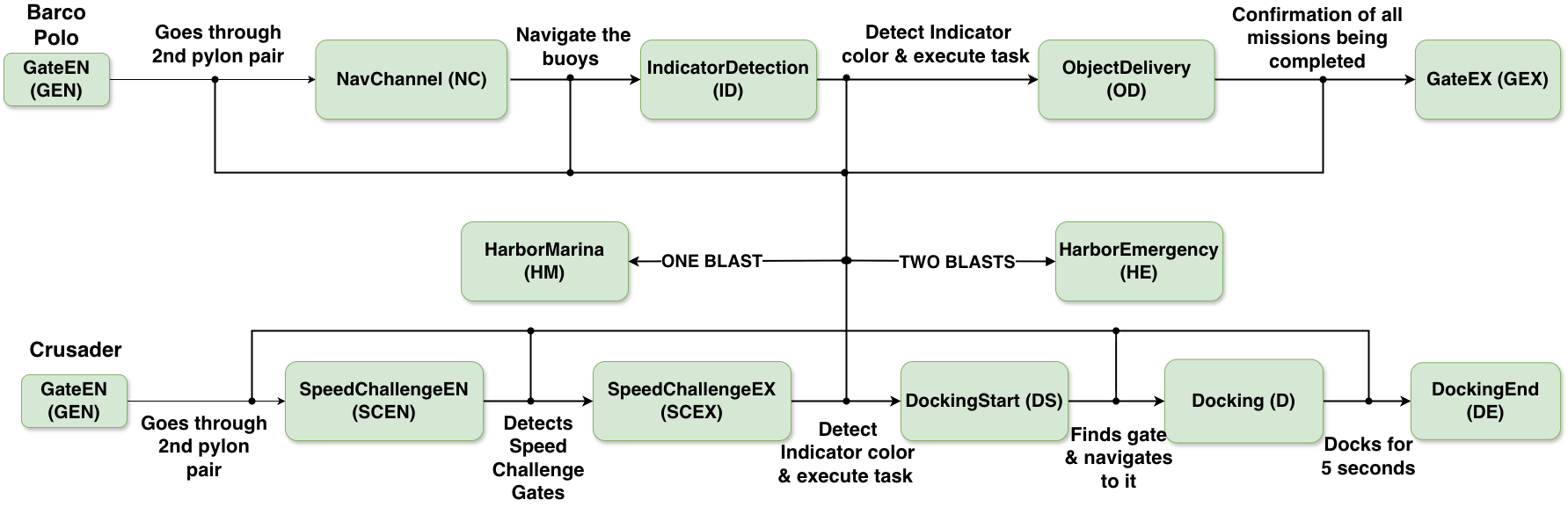

Mission Planner

The Guidance, Navigation, and Control (GNC) stack is structured into three coordinated substacks that together enable reliable autonomous motion. The Navigation substack is responsible for estimating Barco Polo’s position and orientation using differential GNSS (Global Navigation Satellite System) and LiDAR data, enabling robust waypoint-based navigation and local environment awareness. Building on this information, the Guidance substack generates collision-free paths to target waypoints using an A* path planner operating on a local costmap derived from LiDAR data. Finally, the Control substack executes the planned path using a Pure Pursuit controller, converting guidance commands into vehicle motion. This control approach allows effective path tracking without requiring a detailed hydrodynamic model of the ASV, enabling the team to tune performance parameters empirically while maintaining stable and predictable behavior.
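
The two algorithms named above can be sketched in a few lines. The example below is an illustration under simplifying assumptions, not our onboard code: the costmap is reduced to a binary occupancy grid with 4-connected moves of unit cost, and the function names `astar` and `pure_pursuit_curvature` are hypothetical.

```python
import heapq
import math


def astar(grid, start, goal):
    """A* over a 2D occupancy grid (0 = free, 1 = obstacle), 4-connected.

    Returns the list of (row, col) cells from start to goal, or None
    if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry, a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue  # outside the map or occupied
            new_g = g + 1
            if new_g < g_cost.get(nxt, math.inf):
                g_cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


def pure_pursuit_curvature(pose, lookahead_point):
    """Curvature of the arc joining the vehicle pose to a lookahead point.

    pose is (x, y, heading in radians). The result is in 1/m and is
    positive for a left (counter-clockwise) turn.
    """
    x, y, yaw = pose
    dx, dy = lookahead_point[0] - x, lookahead_point[1] - y
    # Lateral offset of the lookahead point in the vehicle frame.
    lateral = -math.sin(yaw) * dx + math.cos(yaw) * dy
    dist_sq = dx * dx + dy * dy
    return 0.0 if dist_sq == 0.0 else 2.0 * lateral / dist_sq
```

In this sketch, the planner output would be converted from grid cells to map coordinates, a lookahead point would be picked a fixed distance ahead along the path, and the commanded yaw rate would be the forward speed multiplied by the returned curvature. The lookahead distance is the main parameter to tune empirically: shorter values track the path more tightly but can oscillate.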

Simulations

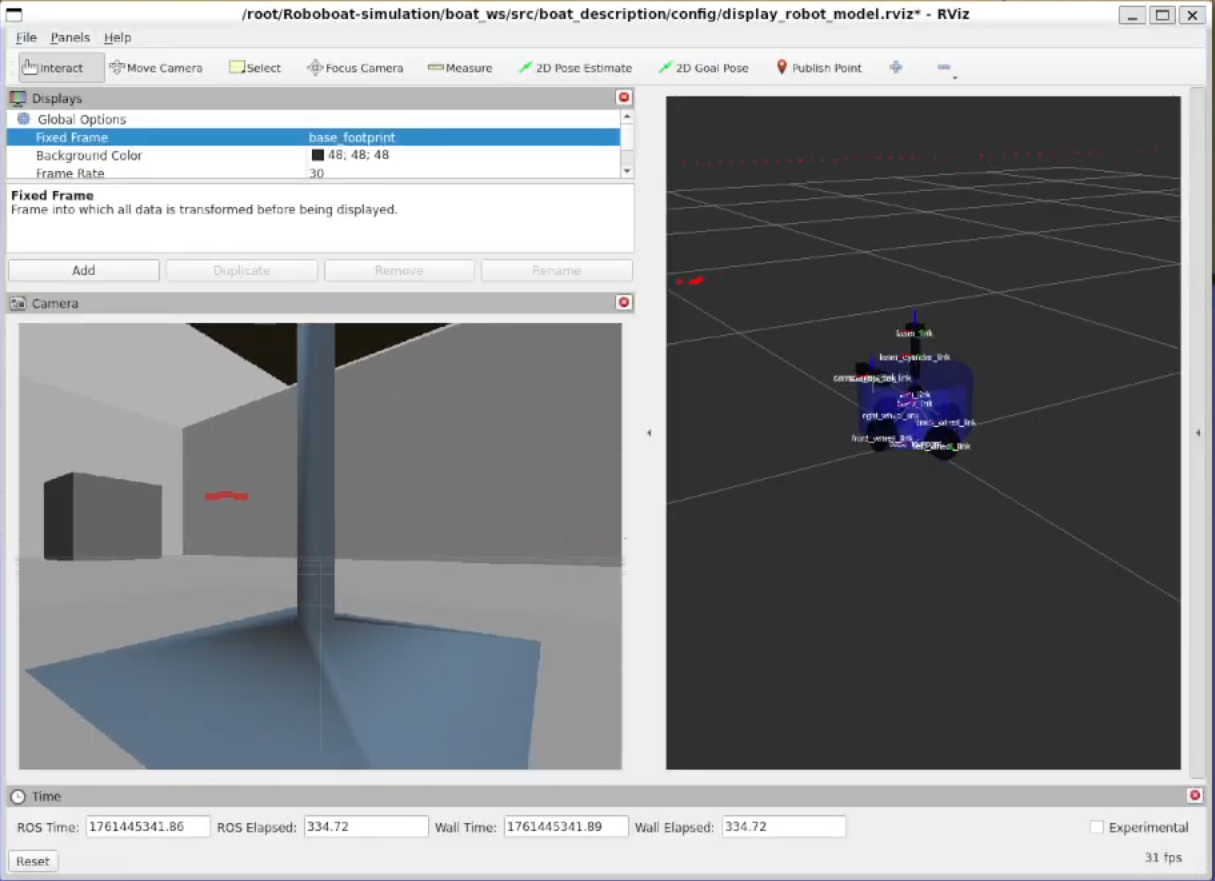

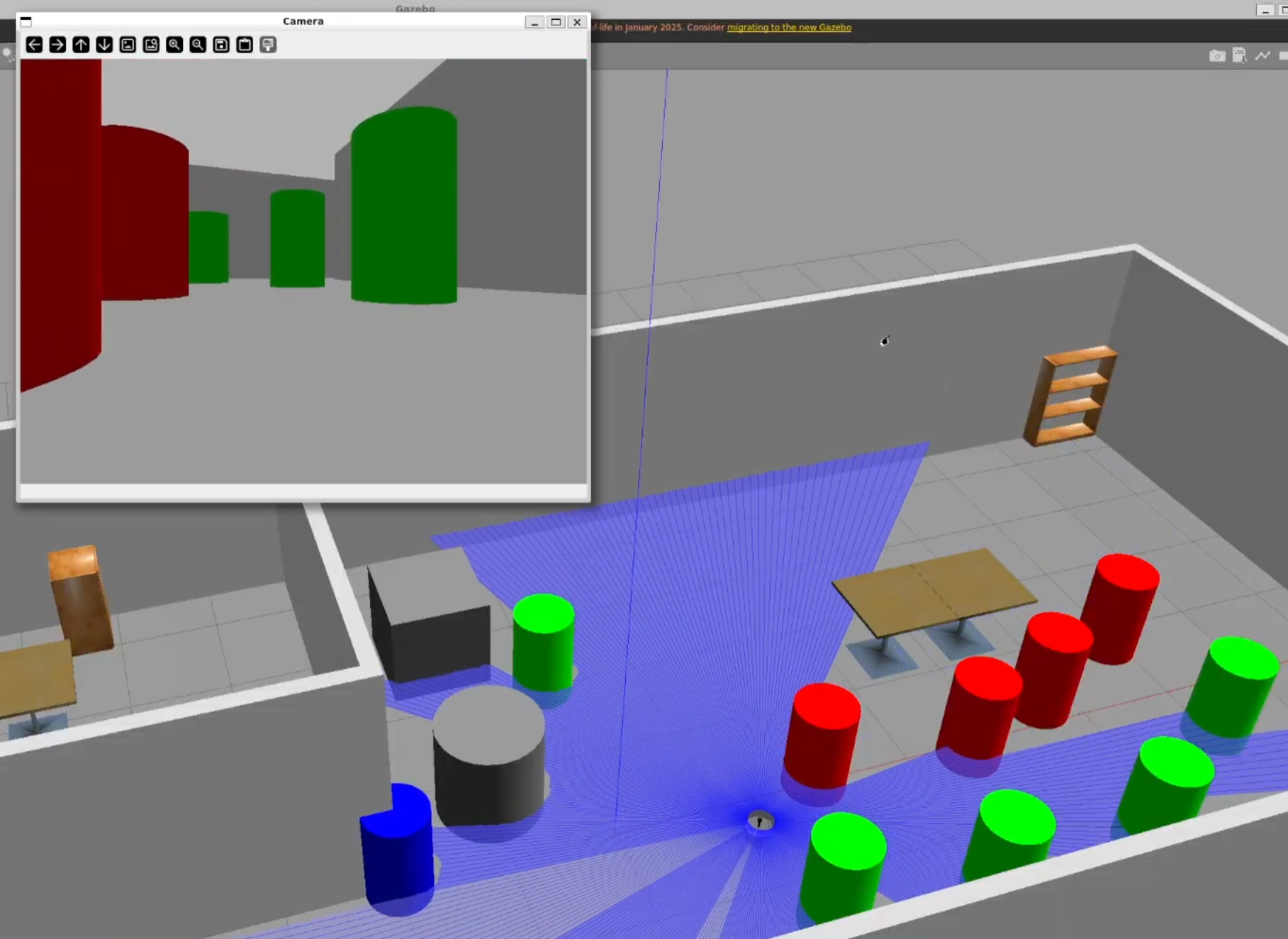

Our testing happens both in simulation and on real water. This year, the team created an omni-directional land robot in Gazebo, allowing members to test mission logic quickly without depending on physical water tests.

Navigation Channel simulation

Omni-directional robot simulation